In my experience the approach you’re describing isn’t simple at all, but fortunately (I think) the solution is far, far easier. First, though, here’s why it’s difficult:

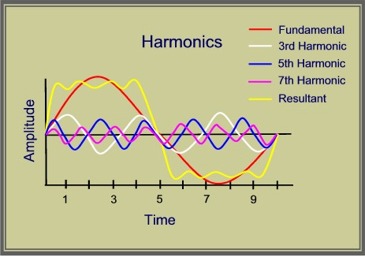

Imagine that you have an instrument with a very wide frequency range, a grand piano for example. As you can see from your chart, it covers roughly 30Hz to 15kHz. Overtones are, by definition, related to the fundamental, and that makes them “dynamic” in the sense that they “move” with whichever fundamental frequency is being played. The first overtone of a 100Hz note is 200Hz, but the first overtone of a 500Hz note is 1kHz.
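A minimal sketch of why overtones “move”: the harmonic series of a note is just whole-number multiples of its fundamental, so the first overtone is the second harmonic. Perfectly integer harmonics are an assumption here; real piano strings are slightly inharmonic, so their upper partials sit a little sharp.

```python
def harmonics(f0: float, max_hz: float) -> list[float]:
    """Ideal harmonic series of f0 (fundamental first), up to max_hz."""
    count = int(max_hz // f0)
    return [k * f0 for k in range(1, count + 1)]

# The same "first overtone" slot lands somewhere different for each note.
print(harmonics(100, 500))   # [100, 200, 300, 400, 500]
print(harmonics(500, 2000))  # [500, 1000, 1500, 2000]
```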

An EQ, however, is “static”, in the sense that it doesn’t move in relation to the frequencies it is processing. If I set my EQ to cut at 1kHz, that will affect the first overtone of a 500Hz note, but for a 100Hz note it hits the tenth harmonic (the ninth overtone) instead. So, in my experience, it doesn’t really make much sense to think about it that way (except in rare cases). For an EQ to ‘follow’ the notes you pretty much have to automate it. On the other hand, that’s also what we already do, indirectly, when we lower the volume of instruments that get in the way.
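To make the “automate the EQ” point concrete, here is a rough sketch of what a band that tracks the first overtone would have to do: recompute its centre frequency on every note change. It assumes equal temperament with A4 = 440Hz and MIDI note numbers; the note sequence is hypothetical.

```python
def midi_to_hz(note: int, a4_hz: float = 440.0) -> float:
    """Equal-tempered frequency of a MIDI note number (69 = A4)."""
    return a4_hz * 2 ** ((note - 69) / 12)

def tracking_eq_centres(notes: list[int], harmonic: int = 2) -> list[float]:
    """Centre frequency an EQ band would need, per note, to sit on the
    given harmonic of that note (harmonic=2 is the first overtone)."""
    return [harmonic * midi_to_hz(n) for n in notes]

# Hypothetical melody: A3, A4, B4. A static band can only match one of these.
melody = [57, 69, 71]
for note, centre in zip(melody, tracking_eq_centres(melody)):
    print(f"MIDI {note}: fundamental {midi_to_hz(note):.1f} Hz -> EQ centre {centre:.1f} Hz")
```

Every entry in that list is a separate automation point, which is why, in practice, riding the level of the instrument that gets in the way is usually the simpler tool.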

I think the easier “approach” is just to use your ears and sweep the EQ until you get something you think sounds good. You can do that by adding content to one instrument, or by cutting in another to make space. It’s obviously pretty unscientific, but once you’re used to it, it’s fast enough, not to mention an approach tested and proven over decades by great engineers.

Another issue is that EQs add phase smear, so it’s not that simple to dial in an EQ with a super-tight Q and get a clean boost or cut of a particular frequency across a whole mix, or even across several instruments. It may be fine on a few, but once it adds up it can get nasty. On top of that, it’s one of those things you can get used to while working, only to come back the next day with fresh ears and go “uh-oh, what did I do!?”…
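One way to put a number on the smear is a minimal sketch using the standard minimum-phase peaking filter from Robert Bristow-Johnson’s Audio EQ Cookbook as a stand-in for “an EQ”. Your plug-in may use a different design, and linear-phase EQs avoid the phase shift at the cost of pre-ringing. The sketch measures the group delay at the centre of a 6dB boost at 1kHz (48kHz sample rate assumed) for a broad and a tight Q; in this model the delay at the centre grows with Q.

```python
import cmath
import math

def peaking_coeffs(f0: float, gain_db: float, q: float, fs: float):
    """RBJ cookbook peaking-EQ biquad, normalised so a[0] == 1."""
    a_lin = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * a_lin, -2 * math.cos(w0), 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * math.cos(w0), 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]

def response(b, a, f: float, fs: float) -> complex:
    """Complex frequency response H(e^{jw}) of the biquad at f Hz."""
    z1 = cmath.exp(-1j * 2 * math.pi * f / fs)  # z^-1 on the unit circle
    return (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)

def group_delay_ms(b, a, f: float, fs: float, df: float = 0.5) -> float:
    """Group delay (-dphase/domega) at f via a central finite difference."""
    dphi = cmath.phase(response(b, a, f + df, fs)) - cmath.phase(response(b, a, f - df, fs))
    dw = 2 * math.pi * (2 * df) / fs   # step in radians per sample
    return -dphi / dw / fs * 1000      # samples -> milliseconds

FS = 48000
for q in (1, 10):
    b, a = peaking_coeffs(1000, 6, q, FS)
    print(f"Q={q}: group delay at 1 kHz = {group_delay_ms(b, a, 1000, FS):.3f} ms")
```

The magnitude at the centre is the same 6dB for both Qs; what changes is how long energy around that frequency hangs around, and that is what accumulates when the same tight move is repeated across many tracks.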

So, regarding your second idea of working from the key, I would argue that it still doesn’t really make sense, at least not in practice. Here you can simply think of the overtone structure as being based not on an instrument’s note (which moves around, as I said above) but on chords, which also move around in most music. So even if you’re in A major and want to work on overtones based on that key, the very same frequencies will have different relationships depending on which chord is producing them. Just as 1kHz has a particular relationship to 500Hz when the latter is the fundamental, it has a different relationship to a 175Hz fundamental, of which it isn’t even a whole-number multiple. Add to that key changes, which can be very brief: it’s entirely possible to modulate to another key for only a bar or two.
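A small sketch of that “same frequency, different relationship” idea: for a fixed frequency, find the nearest harmonic of each candidate root and how far off it is in cents. The roots are the two from the paragraph above; ideal integer harmonics are assumed.

```python
import math

def relation(freq: float, fundamental: float) -> tuple[int, float]:
    """Nearest harmonic number of `fundamental` to `freq`, and the
    distance of `freq` from that harmonic in cents."""
    k = max(1, round(freq / fundamental))
    cents = 1200 * math.log2(freq / (k * fundamental))
    return k, cents

for root in (500, 175):
    k, cents = relation(1000, root)
    print(f"1 kHz vs {root} Hz root: nearest harmonic {k}, {cents:+.1f} cents")
```

Against the 500Hz root, 1kHz is exactly the 2nd harmonic (+0.0 cents); against 175Hz it sits about 84.5 cents below the 6th harmonic (1050Hz), so a band at 1kHz means something different for each chord.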

The one instance when it’s necessary to pay attention to this is when you’re facing either a bad arrangement/composition or a bad instrument. For example, I’ve had issues where a recorded drum was slightly off pitch relative to the key of the song. To many people’s ears the result might not be that something sounds out of tune, but rather that the mix sounds ‘dense’. I’d say this happens especially with low-frequency notes, where pitch is harder to hear. In those cases it makes total sense to EQ out things that aren’t in the key or chord you’re in, although it’s often also possible to just use brute force and pitch-shift the instrument causing the problem.
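For the pitch-shift route, a rough sketch of the arithmetic: convert the drum’s fundamental to a fractional MIDI note and find the nearest note that belongs to the key. The 100Hz measurement is hypothetical (finding the drum’s actual pitch, e.g. with a spectrum analyser, is outside this sketch), and equal temperament with A4 = 440Hz is assumed.

```python
import math

A4_HZ = 440.0
# Pitch classes of A major (C = 0): A, B, C#, D, E, F#, G#
A_MAJOR = {9, 11, 1, 2, 4, 6, 8}

def hz_to_midi(f: float) -> float:
    """Fractional MIDI note number of frequency f (69 = A4)."""
    return 69 + 12 * math.log2(f / A4_HZ)

def midi_to_hz(n: float) -> float:
    return A4_HZ * 2 ** ((n - 69) / 12)

def correction_to_key(f: float, pitch_classes: set[int]) -> tuple[float, float]:
    """Return (semitone shift, target Hz) that moves f onto the nearest
    note whose pitch class is in pitch_classes."""
    m = hz_to_midi(f)
    base = int(m)
    # Searching an octave either side guarantees a candidate for any
    # non-empty set of pitch classes.
    candidates = [n for n in range(base - 12, base + 13) if n % 12 in pitch_classes]
    target = min(candidates, key=lambda n: abs(n - m))
    return target - m, midi_to_hz(target)

# Hypothetical: a drum measured at about 100 Hz in a song in A major.
shift, target_hz = correction_to_key(100.0, A_MAJOR)
print(f"shift {shift:+.2f} semitones -> {target_hz:.1f} Hz")
```

Here 100Hz falls between G2 (not in A major) and G#2, so the sketch suggests pulling the drum up about 0.65 semitones to roughly 103.8Hz; the EQ alternative would instead be a cut around the offending frequency.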

Anyway… those are my thoughts…
