Dear Dorico team,

First, thank you for your remarkable work. I’m a composer and long-time Finale user now transitioning to Dorico, and I greatly appreciate your attention to user feedback, your elegant architecture, and the excellent documentation (especially in French, which is rare and very, very helpful).

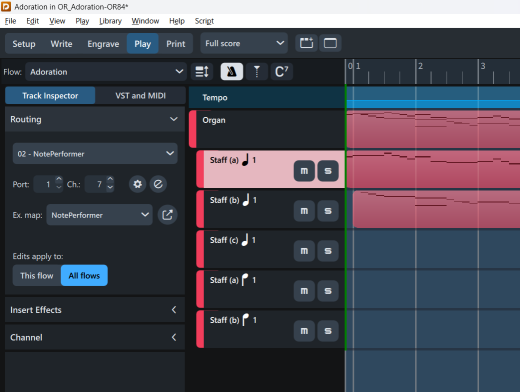

I understand and respect the core philosophy of Dorico — the clear mapping of player → instrument → sound (→ MIDI channel), which serves traditional notation and publishing standards very well. However, I’d like to point out a conceptual limitation that becomes significant in certain contemporary and hybrid compositional contexts.

The issue

Some instruments are inherently multitimbral, meaning that a single performer is expected to control multiple timbres simultaneously. Examples include:

- Organs, with manuals and pedals playing independent voices or registrations.

- Synthesizers, samplers, or custom-built electronic instruments with layered or split sounds.

- Electroacoustic or experimental setups, where different voices trigger different patches or signal paths (e.g., in Reaktor, Usine, or modular environments).

- Extended instrumental practices, where a single player is controlling multiple sonic identities, sometimes across MIDI channels.

In Finale, this was manageable via four independent layers, each assignable to its own MIDI channel, allowing precise polyphonic and multitimbral control within one staff. In Dorico, I understand that layers have been replaced with a more flexible voice model — which is excellent for engraving — but there’s currently no way to route voices within a single instrument to different MIDI channels.

What would be helpful

Without departing from Dorico’s elegant model, here are some things that would significantly improve usability for multitimbral instruments:

- Allowing voice-specific MIDI channel routing (perhaps from Play mode or via expression maps).

- Or introducing the idea of “sub-instruments” or “voice timbres” inside a single instrument, each with its own channel, but grouped under a single player.

- Or simply offering a way to make MIDI output channels configurable per voice, similar to what Cubase allows within a single MIDI region.
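To make the idea of per-voice routing concrete, here is a minimal sketch in Python. It is purely illustrative and not based on any Dorico API: the voice labels, the `VOICE_CHANNELS` table, and the `note_on` helper are all hypothetical names I made up for this example. The only real-world fact it relies on is the standard MIDI note-on message, whose status byte is `0x90` plus the zero-based channel index.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    voice: str     # hypothetical voice label within a single instrument
    pitch: int     # MIDI note number, 0-127
    velocity: int  # MIDI velocity, 1-127


# Hypothetical routing table for one organ player: voice label -> MIDI channel (1-16).
VOICE_CHANNELS = {"manual_I": 1, "manual_II": 2, "pedal": 3}


def note_on(note: Note, routing: dict[str, int], default_channel: int = 1) -> bytes:
    """Build a raw MIDI note-on message, picking the channel from the note's voice."""
    # Voices without an explicit entry fall back to the instrument's default channel.
    channel = routing.get(note.voice, default_channel)
    if not 1 <= channel <= 16:
        raise ValueError(f"MIDI channel out of range: {channel}")
    status = 0x90 | (channel - 1)  # note-on status byte, channel stored zero-based
    return bytes([status, note.pitch & 0x7F, note.velocity & 0x7F])


if __name__ == "__main__":
    for n in (Note("manual_I", 60, 90), Note("pedal", 36, 80)):
        print(n.voice, note_on(n, VOICE_CHANNELS).hex())
```

The point is only that the channel is chosen per voice rather than per instrument, while everything still belongs to one player and one staff group. Voices with no entry in the table simply keep the instrument's default channel, so existing projects would behave as they do today.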

Additionally — and crucially — there should be a way to visually reflect this in the score, as a registration change, patch name, or other marker for the performer. Even if MIDI output were more flexible, it would not suffice without visual notation of the timbral change.

Conclusion

Of course, I’m not asking for a complete rework of Dorico’s model, only for a more flexible and modern approach to multitimbral playing, which is especially important for contemporary composition, live electronics, and notation involving electronic instruments.

This would make Dorico not just the best tool for traditional publishing, but also a robust environment for current and future compositional practices.

Thank you for your attention and continued development.

Best regards,

Vincent