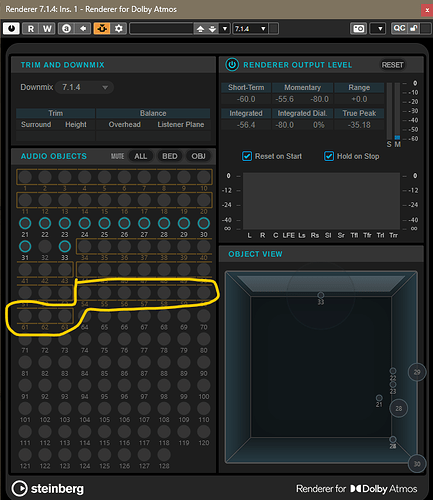

A primary reason to assign a sound or instrument to an object is that the Atmos renderer then gives you mix control over how it is rendered binaurally for headphone listening. By setting an object's binaural render mode to Off (true stereo), Near, Mid, or Far, you gain a lot of control over the final binaural headphone mix.

When listening on Apple products with AirPods via Apple Music, playback uses Apple's own Spatial Audio algorithm, which disregards the binaural metadata (but that is another conversation). On Amazon Music, Tidal, and Apple Music with wired headphones, the listener receives the Atmos mix as a binaural rendering based on the mix engineer's choices of Off, Near, Mid, and Far.

Objects allow the most flexibility in that binaural translation. For example, a drum kit might be set to Off to create the in-your-face drum sound we tend to enjoy in many genres, while a synth, organ, or pad part might be set to Mid or even Far to add depth and dimension, giving the headphone mix more of a 3D sense of space. Alternatively, you could send the drums to the bed and assign the pad to an object.

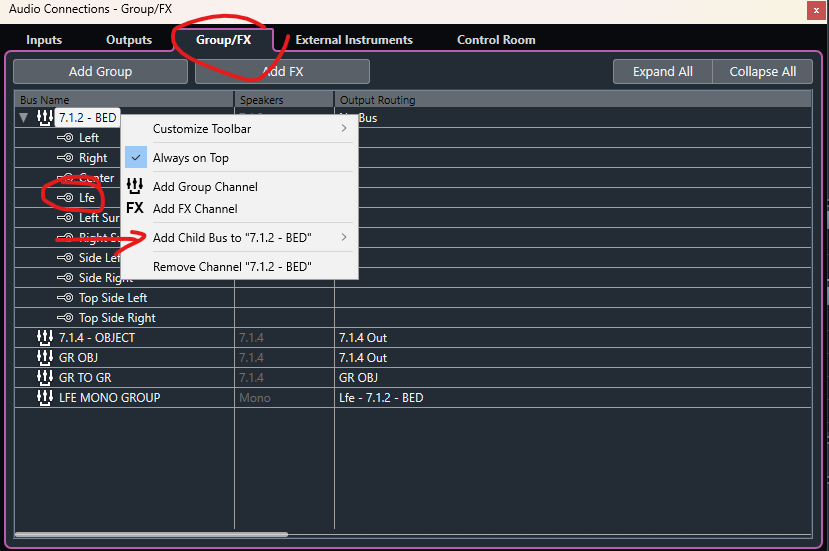

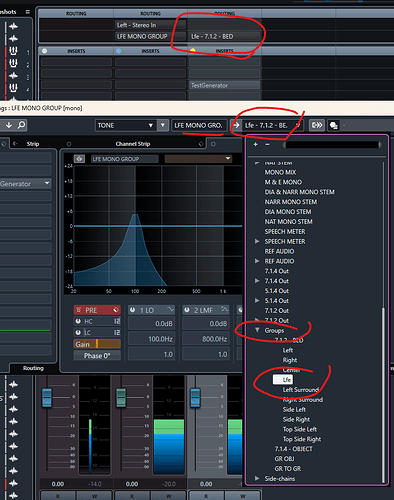

Another common approach in music mixing is the use of Object Beds, a technique that Steve Genewick of Capitol Studios helped create. The idea is to build stereo pairs of objects assigned to "zones":

- Front = L, R
- Wide = Wl, Wr
- Side = Ssl, Ssr
- Rear = Rsl, Rsr
- Top Front = Tfl, Tfr
- Top Rear = Trl, Trr

This allows some unique blends: for example, you can place a sound in the front zone and blend some of it into the rear zone to "pull the sound into the room." An added benefit of this approach is that each zone can have its own binaural setting to enhance the binaural headphone mix. Another benefit can be managing system resources, i.e. using fewer objects.
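To make the resource point concrete, here is a minimal sketch that models an object bed as six zones, each a stereo pair of objects, and compares its object count with giving every stereo track its own object pair. The channel labels follow the zones above. The binaural mode assigned to each zone and the 40-track session are hypothetical examples, not recommended settings or part of the original workflow.

```python
# Hypothetical object bed layout: zone -> (stereo object pair, binaural render mode).
# Channel labels follow the zone list above; the modes are illustrative assumptions only.
OBJECT_BED = {
    "Front":     (("L", "R"), "Off"),
    "Wide":      (("Wl", "Wr"), "Near"),
    "Side":      (("Ssl", "Ssr"), "Mid"),
    "Rear":      (("Rsl", "Rsr"), "Mid"),
    "Top Front": (("Tfl", "Tfr"), "Far"),
    "Top Rear":  (("Trl", "Trr"), "Far"),
}


def object_bed_count(bed):
    """Objects used by the object bed: one per channel in each zone,
    no matter how many tracks are sent into those zones."""
    return sum(len(channels) for channels, _mode in bed.values())


def per_track_count(stereo_tracks):
    """Objects used if every stereo track got its own dedicated object pair."""
    return 2 * stereo_tracks


if __name__ == "__main__":
    for zone, (channels, mode) in OBJECT_BED.items():
        print(f"{zone:<10} {'/'.join(channels):<8} binaural: {mode}")
    print("Object bed:", object_bed_count(OBJECT_BED), "objects")
    # Hypothetical session size, chosen only for comparison.
    print("40 stereo tracks as individual objects:", per_track_count(40), "objects")
```

The object bed stays at 12 objects however many tracks feed it, while the per-track approach grows by two objects for every stereo track. The trade-off is that everything sent to a zone shares that zone's binaural setting.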